OpenAI and Microsoft Sentinel Part 2: Explaining an Analytics Rule

Welcome back to our series on OpenAI and Microsoft Sentinel! Today we will explore another use case for OpenAI's wildly popular language model and take a look at the Sentinel REST API. If you don't already have a Microsoft Sentinel instance, you can create one using a free Azure account and follow the Sentinel onboarding quickstart. You'll also need a personal OpenAI account with an API key. Ready? Let's get started!

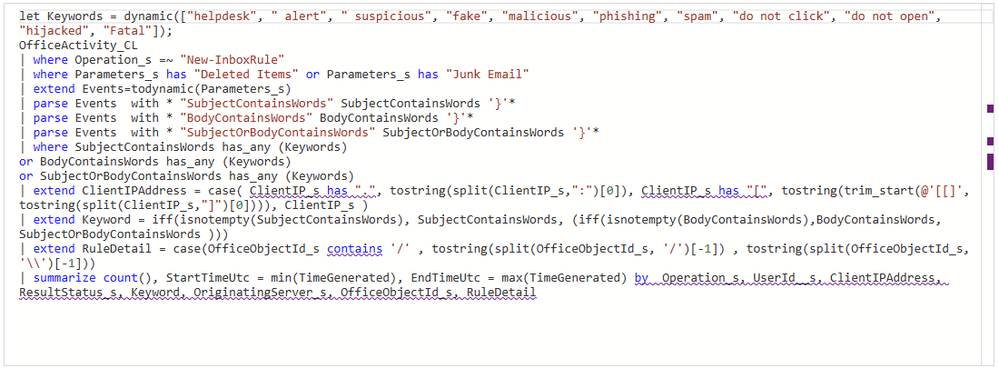

One of the tasks that security practitioners face is rapidly digesting analytics rules, queries, and definitions to understand why an alert was triggered. For example, here is a relatively short Microsoft Sentinel analytics rule written in Kusto Query Language (KQL):

Seasoned KQL operators won't have any difficulty parsing it out, but it will still take a few moments to mentally map out the keywords, log sources, operations, and event logic. Someone who has never worked with KQL might need a few more minutes to understand what this rule is designed to detect. Fortunately, we have a friend who is extremely good at reading code and explaining it in natural language - OpenAI's GPT3!

GPT3 engines such as DaVinci are quite good at explaining code in natural language, and they handle Microsoft Sentinel KQL syntax and usage well. Even better, Sentinel playbooks are built on Azure Logic Apps, which has an OpenAI connector that allows us to integrate GPT3 models in automated Sentinel playbooks! We can use this connector to add a comment to our Sentinel incident describing the Analytics Rule. This will be a straightforward Logic App with a linear action flow:

Let's walk through the logic app step by step, starting with the trigger. We are using a Microsoft Sentinel Incident trigger for this playbook so that we can extract all related Analytic Rule IDs from the incident using the Sentinel connector. We will use the Rule ID to look up the rule query text using the Sentinel REST API, which we can pass to the AI model in a text completion prompt. Finally, we will add the AI model's output to the incident as a comment.

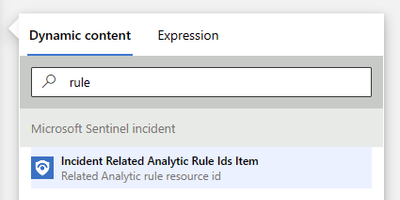
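To make the flow concrete before we build it in the designer, here is a minimal Python sketch of the same four steps. It is only an illustration of the playbook's logic, not how Logic Apps runs it: the function name and the three callables (`fetch_rule`, `explain_query`, `add_comment`) are hypothetical stand-ins for the HTTP action, the OpenAI connector, and the Sentinel comment action.

```python
from typing import Callable, Iterable


def explain_incident_rules(
    incident_arm_id: str,
    related_rule_ids: Iterable[str],
    fetch_rule: Callable[[str], dict],
    explain_query: Callable[[str], str],
    add_comment: Callable[[str, str], None],
) -> int:
    """Run the linear flow once per related rule; return the number of comments added."""
    added = 0
    for rule_id in related_rule_ids:               # "For each" over the related rule IDs
        rule = fetch_rule(rule_id)                 # look up the rule via the REST API
        query = rule["properties"]["query"]        # keep only the rule's query text
        explanation = explain_query(query)         # text completion prompt
        add_comment(incident_arm_id, explanation)  # write the result to the incident
        added += 1
    return added
```

Passing the three steps in as callables keeps the sketch runnable without any Azure or OpenAI credentials; you can swap in real implementations later.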

Our first action is a "For each" logic block that acts on Sentinel's "Incident Related Analytic Rule Ids Item":

Next, we need to use the Sentinel REST API to request the scheduled alert rule itself. This API endpoint is documented here: https://learn.microsoft.com/en-us/rest/api/securityinsights/stable/alert-rules/get?tabs=HTTP. If you haven't used the Sentinel API before, you can click the green "Try it" button next to the code block to preview the actual request using your credentials. It's a great way to explore the API! In our case, the "Get - Alert Rules" request looks like this:

GET https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.OperationalInsights/workspaces/{workspaceName}/providers/Microsoft.SecurityInsights/alertRules/{ruleId}?api-version=2022-11-01

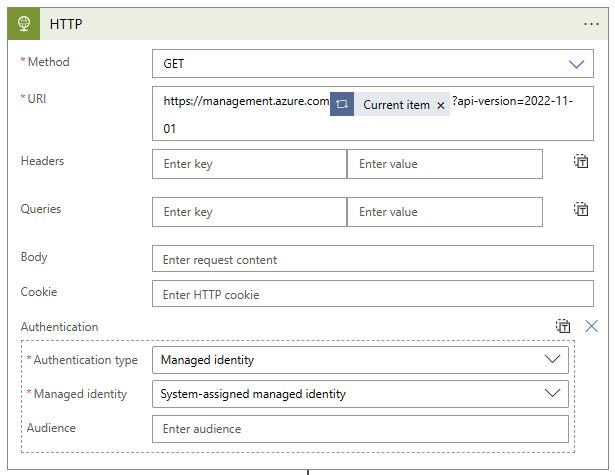
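If you want to experiment with this endpoint outside the "Try it" page, the request URL can be assembled from its four path parameters. The helper below is a small sketch; the function name is my own, and the sample values in the usage note are placeholders rather than real resources.

```python
from urllib.parse import quote

API_VERSION = "2022-11-01"  # the version used in the request above


def alert_rule_url(subscription_id: str, resource_group: str,
                   workspace: str, rule_id: str,
                   api_version: str = API_VERSION) -> str:
    """Build the "Get - Alert Rules" URL from its path parameters."""
    def seg(value: str) -> str:
        # Percent-encode each value so it stays a single path segment.
        return quote(value, safe="")

    return (
        "https://management.azure.com"
        f"/subscriptions/{seg(subscription_id)}"
        f"/resourceGroups/{seg(resource_group)}"
        "/providers/Microsoft.OperationalInsights"
        f"/workspaces/{seg(workspace)}"
        "/providers/Microsoft.SecurityInsights"
        f"/alertRules/{seg(rule_id)}"
        f"?api-version={api_version}"
    )
```

For example, `alert_rule_url("sub-1", "rg", "ws", "rule-1")` produces the URL shown above with those placeholder values filled in.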

We can make this API call using an "HTTP" action in our Logic App. Fortunately, the Incident Related Analytic Rule Ids Item that we just added to the "For each" logic block comes with nearly all of the parameters prefilled. We just need to prepend the API domain and append the version specification - the subscriptionId, resourceGroupName, workspaceName, and ruleId values will all come from our dynamic content object. The actual text of my URI block is as follows:

https://management.azure.com@{items('For_each_related_Analytics_Rule_ID')}?api-version=2022-11-01
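The same string concatenation is easy to mirror in Python if you ever need to test the lookup outside the playbook. This sketch assumes, as the URI expression above implies, that each related rule ID is a full resource path beginning with `/subscriptions/...`; the function name is hypothetical.

```python
ARM_ENDPOINT = "https://management.azure.com"


def rule_uri_from_arm_id(rule_arm_id: str, api_version: str = "2022-11-01") -> str:
    """Prepend the API domain and append the api-version to a rule's resource ID."""
    if not rule_arm_id.startswith("/"):
        # Assumption: the ID is a resource path, so it must start with a slash.
        raise ValueError(f"expected a resource path starting with '/': {rule_arm_id!r}")
    return f"{ARM_ENDPOINT}{rule_arm_id}?api-version={api_version}"
```

Failing fast on an ID that is not a resource path makes a malformed item much easier to spot than a 404 from the API.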

We'll also need to configure the authentication options for the HTTP action - I'm using a managed identity for my Logic App. The finished action block looks like this:

Now that we have our alert rule, we need to parse out just the rule text so that we can pass it to GPT3. Let's use a Parse JSON action, give it the Body content from our HTTP step, and define the schema to match the expected output from this API call. The easiest way to generate a schema is to upload a sample payload, but we can leave out any properties we are not interested in. I've shortened my schema to look like this:

    {
      "type": "object",
      "properties": {
        "id": {
          "type": "string"
        },
        "type": {
          "type": "string"
        },
        "kind": {
          "type": "string"
        },
        "properties": {
          "type": "object",
          "properties": {
            "severity": {
              "type": "string"
            },
            "query": {
              "type": "string"
            },
            "tactics": {},
            "techniques": {},
            "displayName": {
              "type": "string"
            },
            "description": {
              "type": "string"
            },
            "lastModifiedUtc": {
              "type": "string"
            }
          }
        }
      }
    }
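For readers who think in code, the Parse JSON step boils down to pulling `properties.query` out of the response body. Here is a rough Python equivalent; the function name is my own, and the sample body in the usage note is a made-up placeholder rather than real API output.

```python
import json


def extract_rule_query(body: str) -> str:
    """Return the KQL text stored under properties.query in an alert rule response."""
    rule = json.loads(body)
    properties = rule.get("properties") or {}
    query = properties.get("query")
    if not isinstance(query, str) or not query.strip():
        # Not every response is guaranteed to carry a query; fail loudly if it is absent.
        raise ValueError("alert rule response has no properties.query string")
    return query
```

Calling `extract_rule_query('{"kind": "Scheduled", "properties": {"query": "SigninLogs | take 10"}}')` returns just the query text, which is all we need for the prompt.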

So far, our logic block looks like this:

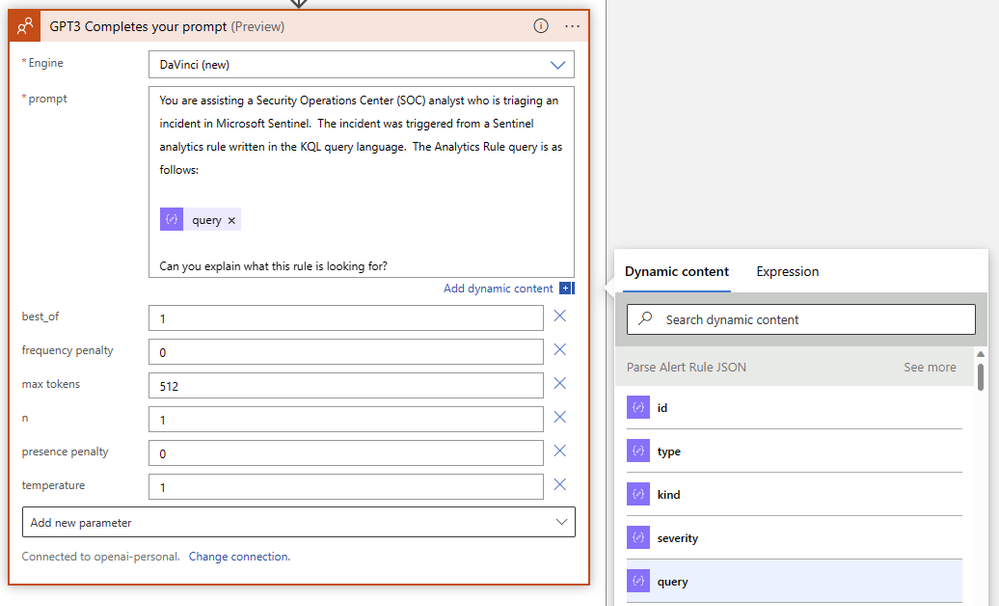

Now it's time to get the AI involved! Choose "GPT3 Completes your prompt" from the OpenAI connector and write up your prompt, using the "query" dynamic content object from the previous Parse JSON step. We'll use the latest DaVinci engine and leave most of the default parameters. Our query doesn't show a significant difference between high and low temperature values, but we do want to increase the "max tokens" parameter to give the DaVinci model a little more room for long answers. The finished action should look like this:

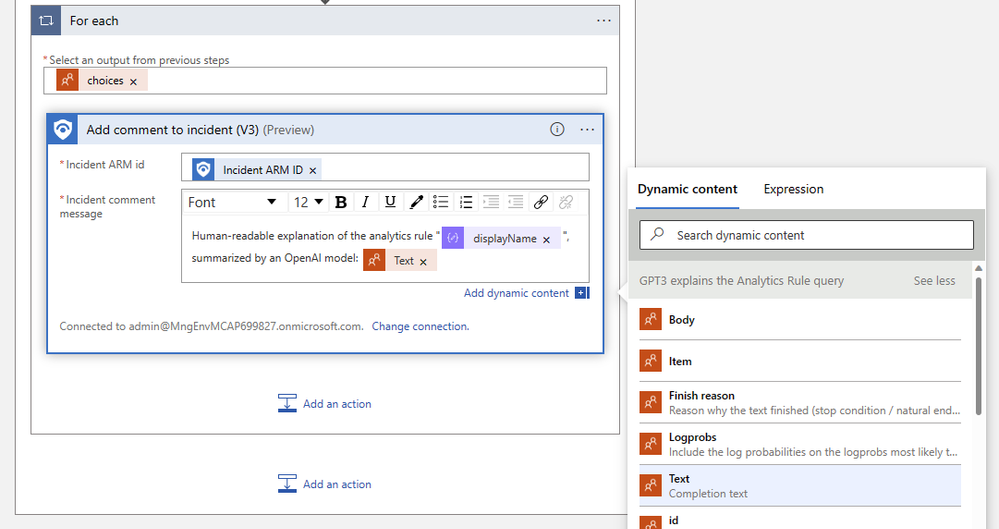
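Behind the connector, a text completion request is a small set of parameters: the model, the prompt, and tuning values such as temperature and max tokens. The sketch below assembles such a request as a plain dictionary. Everything specific in it is an assumption for illustration: the prompt wording, the model name `text-davinci-003`, and the `max_tokens` and `temperature` values are my own choices, not the settings from the screenshot.

```python
def build_completion_request(kql_query: str,
                             model: str = "text-davinci-003",  # assumed model name
                             max_tokens: int = 500,            # illustrative value
                             temperature: float = 1.0) -> dict:
    """Assemble completion parameters that ask the model to explain a KQL query."""
    prompt = (
        "Explain what the following Microsoft Sentinel analytics rule query "
        "is designed to detect:\n\n"
        f"{kql_query}\n"
    )
    return {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,    # raised to leave room for longer answers
        "temperature": temperature,
    }
```

Keeping the prompt in one place like this makes it easy to try different wordings and compare the explanations you get back.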

The final step in our playbook will add a comment to the incident using GPT3's result text. If you'd rather add an incident task, just select that Sentinel action instead. Add the "Incident ARM ID" dynamic content object and compose the comment message using the "Text (Completion text)" output from the GPT3 action. The logic app designer will automatically wrap your comment action in a "For each" logic block. The finished comment action should look similar to this one:

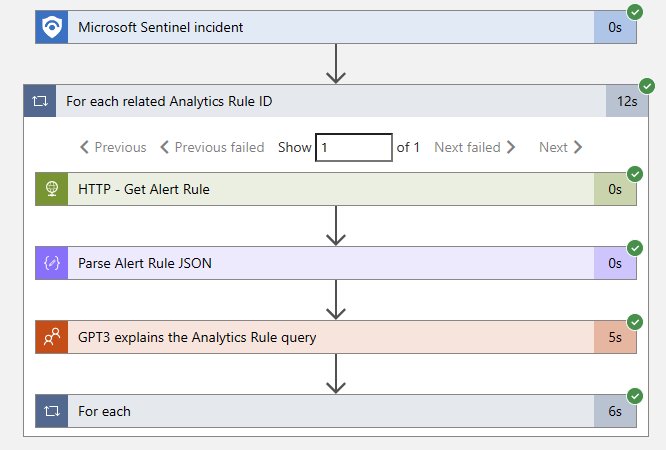
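The automatic "For each" makes more sense once you picture the completion result as a list of choices, each with its own text. That is my reading of the designer's behavior, so treat it as an assumption. Under that assumption, this sketch turns a completion result into one comment message per choice; the `choices`/`text` layout and the function name are illustrative.

```python
from typing import List


def comments_from_completion(response: dict) -> List[str]:
    """Return one trimmed comment message per non-empty completion choice."""
    messages = []
    for choice in response.get("choices", []):  # mirrors the "For each" wrapper
        text = (choice.get("text") or "").strip()
        if text:                                # skip empty completions
            messages.append(text)
    return messages
```

With a single choice, which is the usual case, this yields exactly one comment for the incident.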

Save the logic app, and let's try it out on an incident! If all goes well, our Logic App run history will show a successful completion. If anything goes wrong, you can check the exact input and output details for each step - an invaluable troubleshooting tool! In our case, it's green checkmarks all the way down:

Success! The playbook added a comment to the incident, saving our overworked security analyst a few extra minutes.
