How the Bit Was Born: Claude Shannon and the Invention of Information

By Maria Popova

“All things physical are information-theoretic in origin and this is a participatory universe… Observer-participancy gives rise to information,” the pioneering theoretical physicist John Wheeler asserted in his visionary It from Bit concept in 1989. But what exactly is the bit, this elemental unit of participatory sensemaking, and how did it come to permeate our consciousness?

That’s what James Gleick examines in a portion of The Information: A History, a Theory, a Flood — his now-classic chronicle of how technology and human thought co-evolved, which remains one of the finest, sharpest books written in our century, full of ideas fertilized by the genius of a singular seer and incubated in the nest of time and culture into ever-growing prescience.

The story of the bit begins in 1948, the year Bell Labs announced the transistor — a technology that would go on to earn its inventors the Nobel Prize and alter nearly every aspect of modern life. The air was aswirl with technologically fomented cultural tumult — that selfsame year, the great nature writer Henry Beston was calling for reclaiming our humanity from the tyranny of technology, while across the Atlantic the German philosopher Josef Pieper was penning his timely manifesto for resisting the cult of productivity. But the most significant revolution that year was being set in motion out of the public eye by a young engineer named Claude Shannon (April 30, 1916–February 24, 2001).

Gleick writes:

An invention even more profound and more fundamental [than the transistor] came in a monograph spread across seventy-nine pages of The Bell System Technical Journal in July and October. No one bothered with a press release. It carried a title both simple and grand — “A Mathematical Theory of Communication” — and the message was hard to summarize. But it was a fulcrum around which the world began to turn. Like the transistor, this development also involved a neologism: the word bit, chosen in this case not by committee but by the lone author, a thirty-two-year-old named Claude Shannon. The bit now joined the inch, the pound, the quart, and the minute as a determinate quantity — a fundamental unit of measure.

But measuring what? “A unit for measuring information,” Shannon wrote, as though there were such a thing, measurable and quantifiable, as information.
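What the unit measures can be made concrete with a small sketch. It assumes the standard entropy formula from Shannon's paper, H = -Σ p log2 p, where each p is the probability of one possible outcome; the function names here are illustrative only and come from neither Gleick nor Shannon.

```python
import math
from collections import Counter


def entropy_bits(probabilities):
    """Shannon entropy H = -sum(p * log2(p)), measured in bits."""
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def message_entropy_bits(message):
    """Average bits per symbol, estimated from symbol frequencies in `message`."""
    counts = Counter(message)
    total = len(message)
    return entropy_bits(count / total for count in counts.values())


fair_coin = entropy_bits([0.5, 0.5])        # one yes/no choice between equals
biased_coin = entropy_bits([0.9, 0.1])      # a more predictable coin carries less
eight_way_choice = entropy_bits([1 / 8] * 8)  # three yes/no choices in a row
```

A fair coin toss carries exactly one bit, a choice among eight equally likely alternatives carries three, and the more predictable an outcome, the less information its arrival conveys.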

Culture then wasn’t as bedeviled by short-termism and compulsive pragmatism as it is today. Venerated thinkers were making the case for “the usefulness of useless knowledge” and even the commercial bastions of technology saw beyond the immediate practical application of their work. At AT&T, where Claude Shannon worked, employees were encouraged to make, as Gleick puts it, “detours into mathematics or astrophysics with no apparent commercial purpose.” Shannon made his particular detour into a field yet unnamed, strewn with questions and possibilities, which he would soon christen “information theory” and which would go on to become the underlying sensemaking framework of modern consciousness.

Shannon’s contribution was an embodiment of Schopenhauer’s definition of genius — he was simmered in the same cultural stew as his peers and contemporaries, lived with the same histories as everyone else, but he saw something no one else saw: a potential, a promise, and, finally, a possibility. Gleick writes:

Everyone understood that electricity served as a surrogate for sound, the sound of the human voice, waves in the air entering the telephone mouthpiece and converted into electrical waveforms. This conversion was the essence of the telephone’s advance over the telegraph — the predecessor technology, already seeming so quaint. Telegraphy relied on a different sort of conversion: a code of dots and dashes, not based on sounds at all but on the written alphabet, which was, after all, a code in its turn. Indeed, considering the matter closely, one could see a chain of abstraction and conversion: the dots and dashes representing letters of the alphabet; the letters representing sounds, and in combination forming words; the words representing some ultimate substrate of meaning, perhaps best left to philosophers.

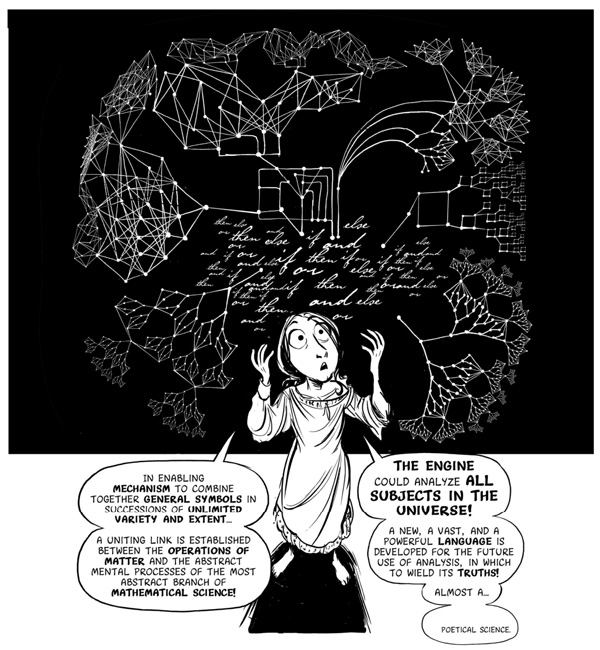

Shannon, like computing pioneer Alan Turing, was particularly enchanted by the “chain of abstraction and conversion” entailed in cryptography and puzzle-solving. As a junior research assistant at MIT, he had worked on building the Differential Analyzer — a primitive computer with cogs and gears envisioned by Vannevar Bush a century after Charles Babbage and Ada Lovelace’s groundbreaking Analytical Engine. (Bush, arguably, had laid the ideological foundation for information theory in his prophetic essay “As We May Think,” published three years before Shannon’s invention of the bit.) When Turing himself visited Bell Labs in 1943, he occasionally lunched with Shannon and the two traded speculative theories about the future of computing. Shannon’s ideas impressed even a mind as unusual and far-seeing as that of Turing, who marveled about his American friend:

Shannon wants to feed not just data to a Brain, but cultural things! He wants to play music to it!

Gleick considers the singular way in which Shannon set about making sense of his era, his culture, and his own mind:

Logic and circuits crossbred to make a new, hybrid thing; so did codes and genes. In his solitary way, seeking a framework to connect his many threads, Shannon began assembling a theory for information.

The raw material lay all around, glistening and buzzing in the landscape of the early twentieth century, letters and messages, sounds and images, news and instructions, figures and facts, signals and signs: a hodgepodge of related species. They were on the move, by post or wire or electromagnetic wave. But no one word denoted all that stuff. “Off and on,” Shannon wrote to Vannevar Bush at MIT in 1939, “I have been working on an analysis of some of the fundamental properties of general systems for the transmission of intelligence.”

But the word intelligence was old — so old that it was encrusted with the patina of multiple meanings as each civilizational epoch appropriated it for a particular use. Shannon’s direction of thought needed a new semantic packet — if not a linguistic blank slate, then at least a term that could be newly inscribed with original and singular meaning. One word bubbled up the ranks of the Bell Labs engineers to take on this function: information.

Gleick explains:

For the purposes of science, information had to mean something special. Three centuries earlier, the new discipline of physics could not proceed until Isaac Newton appropriated words that were ancient and vague — force, mass, motion, and even time — and gave them new meanings. Newton made these terms into quantities, suitable for use in mathematical formulas. Until then, motion (for example) had been just as soft and inclusive a term as information. For Aristotelians, motion covered a far-flung family of phenomena: a peach ripening, a stone falling, a child growing, a body decaying. That was too rich. Most varieties of motion had to be tossed out before Newton’s laws could apply and the Scientific Revolution could succeed. In the nineteenth century, energy began to undergo a similar transformation: natural philosophers adapted a word meaning vigor or intensity. They mathematicized it, giving energy its fundamental place in the physicists’ view of nature.

It was the same with information. A rite of purification became necessary.

And then, when it was made simple, distilled, counted in bits, information was found to be everywhere. Shannon’s theory made a bridge between information and uncertainty; between information and entropy; and between information and chaos. It led to compact discs and fax machines, computers and cyberspace, Moore’s law and all the world’s Silicon Alleys.

[…]

We can see now that information is what our world runs on: the blood and the fuel, the vital principle … transforming every branch of knowledge.

The Information remains an indispensable read in its totality. Complement it with some thoughts on what wisdom means in the age of information, Walter Benjamin on the tension between the two, the story of how one Belgian visionary paved the way for the Information Age, and why Ada Lovelace merits being considered the world’s first computer programmer, then revisit Gleick on the origin of our anxiety about time and the story behind Newton’s famous “standing on the shoulders of giants” metaphor for knowledge.

—

Published September 6, 2016

—

https://www.themarginalian.org/2016/09/06/james-gleick-the-information-claude-shannon/
